Cecilia Panigutti

Involved in research lines 1 ▪ 4 ▪ 5

Role: PhD Student

Affiliation: Scuola Normale

27.

[PBF2022] Panigutti Cecilia, Beretta Andrea, Fadda Daniele, Giannotti Fosca, Pedreschi Dino, Perotti Alan, Rinzivillo Salvatore (2022). In ACM Transactions on Interactive Intelligent Systems

Abstract

eXplainable AI (XAI) involves two intertwined but separate challenges: the development of techniques to extract explanations from black-box AI models, and the way such explanations are presented to users, i.e., the explanation user interface. Despite its importance, the second aspect has received limited attention so far in the literature. Effective AI explanation interfaces are fundamental for allowing human decision-makers to take advantage of and oversee high-risk AI systems effectively. Following an iterative design approach, we present the first cycle of prototyping-testing-redesigning of an explainable AI technique, and its explanation user interface for clinical Decision Support Systems (DSS). We first present an XAI technique that meets the technical requirements of the healthcare domain: sequential, ontology-linked patient data, and multi-label classification tasks. We demonstrate its applicability to explain a clinical DSS, and we design a first prototype of an explanation user interface. Next, we test such a prototype with healthcare providers and collect their feedback, with a two-fold outcome: first, we obtain evidence that explanations increase users' trust in the XAI system, and second, we obtain useful insights on the perceived deficiencies of their interaction with the system, so that we can re-design a better, more human-centered explanation interface.

Research Line 1▪3▪4

28.

[PBP2022] Panigutti Cecilia, Beretta Andrea, Pedreschi Dino, Giannotti Fosca (2022) - 2022 Conference on Human Factors in Computing Systems. In Proceedings of the 2022 Conference on Human Factors in Computing Systems

Abstract

The field of eXplainable Artificial Intelligence (XAI) focuses on providing explanations for AI systems' decisions. XAI applications to AI-based Clinical Decision Support Systems (DSS) should increase trust in the DSS by allowing clinicians to investigate the reasons behind its suggestions. In this paper, we present the results of a user study on the impact of advice from a clinical DSS on healthcare providers' judgment in two different cases: the case where the clinical DSS explains its suggestion and the case it does not. We examined the weight of advice, the behavioral intention to use the system, and the perceptions with quantitative and qualitative measures. Our results indicate a more significant impact of advice when an explanation for the DSS decision is provided. Additionally, through the open-ended questions, we provide some insights on how to improve the explanations in the diagnosis forecasts for healthcare assistants, nurses, and doctors.
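The abstract does not spell out how the weight of advice is computed. In the advice-taking literature it is commonly defined as the fraction of the distance between a participant's initial estimate and the advisor's suggestion that the participant covers in the final estimate. A minimal sketch under that assumption follows; the function name and the numbers are hypothetical and are not taken from the study.

```python
def weight_of_advice(initial, advice, final):
    """Common definition: (final - initial) / (advice - initial).

    0.0 means the advice was ignored, 1.0 means it was fully adopted.
    Returns None when the advice equals the initial estimate, because
    the ratio is undefined in that case.
    """
    if advice == initial:
        return None
    return (final - initial) / (advice - initial)


# Hypothetical example: a clinician first estimates a 40% chance,
# the DSS suggests 80%, and the clinician settles on 60%.
woa = weight_of_advice(initial=40, advice=80, final=60)
print(woa)
```

Under this definition, comparing the average value between the "with explanation" and "without explanation" conditions is one way to quantify how much the explanation changes reliance on the DSS.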

Research Line 4

32.

[PMC2022]

Panigutti Cecilia, Monreale Anna, Comandè Giovanni, Pedreschi Dino (2022) - Deep Learning in Biology and Medicine. In Deep Learning in Biology and Medicine

Abstract

Biology, medicine and biochemistry have become data-centric fields for which Deep Learning methods are delivering groundbreaking results. Addressing high impact challenges, Deep Learning in Biology and Medicine provides an accessible and organic collection of Deep Learning essays on bioinformatics and medicine. It caters for a wide readership, ranging from machine learning practitioners and data scientists seeking methodological knowledge to address biomedical applications, to life science specialists in search of a gentle reference for advanced data analytics. With contributions from internationally renowned experts, the book covers foundational methodologies in a wide spectrum of life sciences applications, including electronic health record processing, diagnostic imaging, text processing, as well as omics-data processing. This survey of consolidated problems is complemented by a selection of advanced applications, including cheminformatics and biomedical interaction network analysis. A modern and mindful approach to the use of data-driven methodologies in the life sciences also requires careful consideration of the associated societal, ethical, legal and transparency challenges, which are covered in the concluding chapters of this book.

45.

[PPB2021]

Panigutti Cecilia, Perotti Alan, Panisson André, Bajardi Paolo, Pedreschi Dino (2021) - Information Processing & Management. In Journal of Information Processing and Management

Abstract

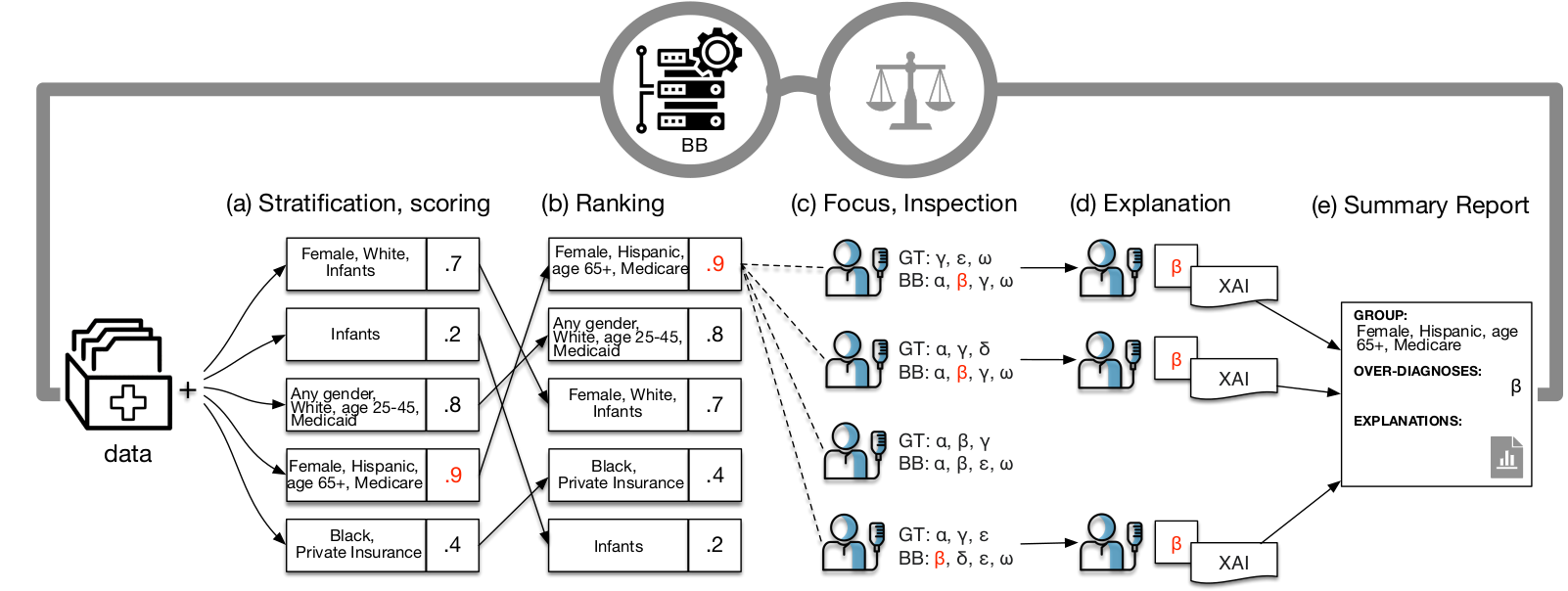

Highlights: We present a pipeline to detect and explain potential fairness issues in Clinical DSS. We study and compare different multi-label classification disparity measures. We explore ICD9 bias in MIMIC-IV, an openly available ICU benchmark dataset.

54.

[PGM2019]

Panigutti Cecilia, Guidotti Riccardo, Monreale Anna, Pedreschi Dino (2021) - Precision Health and Medicine. In International Workshop on Health Intelligence (pp. 97-110). Springer, Cham.

Abstract

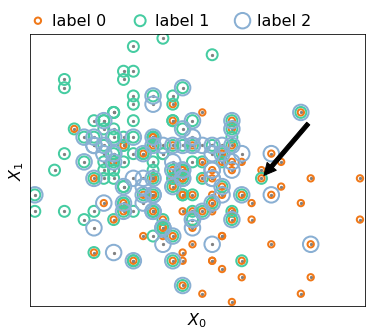

Today the state-of-the-art performance in classification is achieved by the so-called “black boxes”, i.e. decision-making systems whose internal logic is obscure. Such models could revolutionize the health-care system; however, their deployment in real-world diagnosis decision support systems is subject to several risks and limitations due to the lack of transparency. The typical classification problem in health-care requires a multi-label approach since the possible labels are not mutually exclusive, e.g. diagnoses. We propose MARLENA, a model-agnostic method which explains multi-label black box decisions. MARLENA explains an individual decision in three steps. First, it generates a synthetic neighborhood around the instance to be explained using a strategy suitable for multi-label decisions. It then learns a decision tree on this neighborhood and finally derives from it a decision rule that explains the black box decision. Our experiments show that MARLENA performs well in terms of mimicking the black box behavior while gaining at the same time a notable amount of interpretability through compact decision rules, i.e. rules with limited length.
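The three steps described in the abstract can be illustrated with a toy local-surrogate sketch. This is not the MARLENA implementation: the black box is a hypothetical two-label function, the neighborhood is plain Gaussian noise rather than the paper's multi-label-aware strategy, and the tree treats each distinct label set as one class. All names below are assumptions made for illustration.

```python
import random
from collections import Counter

def black_box(x):
    """Hypothetical multi-label model: returns one 0/1 flag per label."""
    label_a = int(x[0] > 0.5)
    label_b = int(x[0] > 0.5 and x[1] > 0.3)
    return (label_a, label_b)

def make_neighborhood(x, n=400, scale=0.3, seed=0):
    """Step 1 (simplified): sample synthetic points around x with Gaussian noise."""
    rng = random.Random(seed)
    return [[v + rng.gauss(0.0, scale) for v in x] for _ in range(n)]

def gini(labels):
    """Gini impurity, treating each distinct label set as one class."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_split(X, y):
    """Return (impurity, feature, threshold) of the best axis-aligned split, or None."""
    best = None
    for f in range(len(X[0])):
        values = sorted({row[f] for row in X})
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            if not left or not right:
                continue  # guard against degenerate midpoints
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t)
    return best

def build_tree(X, y, depth=3):
    """Step 2: fit a small surrogate decision tree on the labelled neighborhood."""
    majority = Counter(y).most_common(1)[0][0]
    split = best_split(X, y) if depth > 0 and len(set(y)) > 1 else None
    if split is None:
        return majority  # leaf: a tuple of label flags
    _, f, t = split
    left = [(r, l) for r, l in zip(X, y) if r[f] <= t]
    right = [(r, l) for r, l in zip(X, y) if r[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([r for r, _ in left], [l for _, l in left], depth - 1),
        "right": build_tree([r for r, _ in right], [l for _, l in right], depth - 1),
    }

def extract_rule(tree, x):
    """Step 3: read the root-to-leaf path followed by x as a decision rule."""
    conditions, node = [], tree
    while isinstance(node, dict):
        f, t = node["feature"], node["threshold"]
        goes_left = x[f] <= t
        conditions.append(f"x[{f}] {'<=' if goes_left else '>'} {t:.2f}")
        node = node["left" if goes_left else "right"]
    return conditions, node

instance = [0.8, 0.6]
neighbors = make_neighborhood(instance)
labels = [black_box(p) for p in neighbors]
tree = build_tree(neighbors, labels)
conditions, predicted = extract_rule(tree, instance)

# Fidelity: share of neighbors on which the surrogate agrees with the black box.
agreements = sum(extract_rule(tree, p)[1] == lab for p, lab in zip(neighbors, labels))
fidelity = agreements / len(neighbors)

print("IF", " AND ".join(conditions), "THEN labels =", predicted)
print("fidelity on the neighborhood:", fidelity)
```

Capping the tree depth keeps the extracted rule short, which mirrors the trade-off the abstract mentions between mimicking the black box and producing compact rules.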

57.

[PPP2020]

Panigutti Cecilia, Perotti Alan, Pedreschi Dino (2020) - FAT* '20: Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency. In FAT* '20: Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency

Abstract

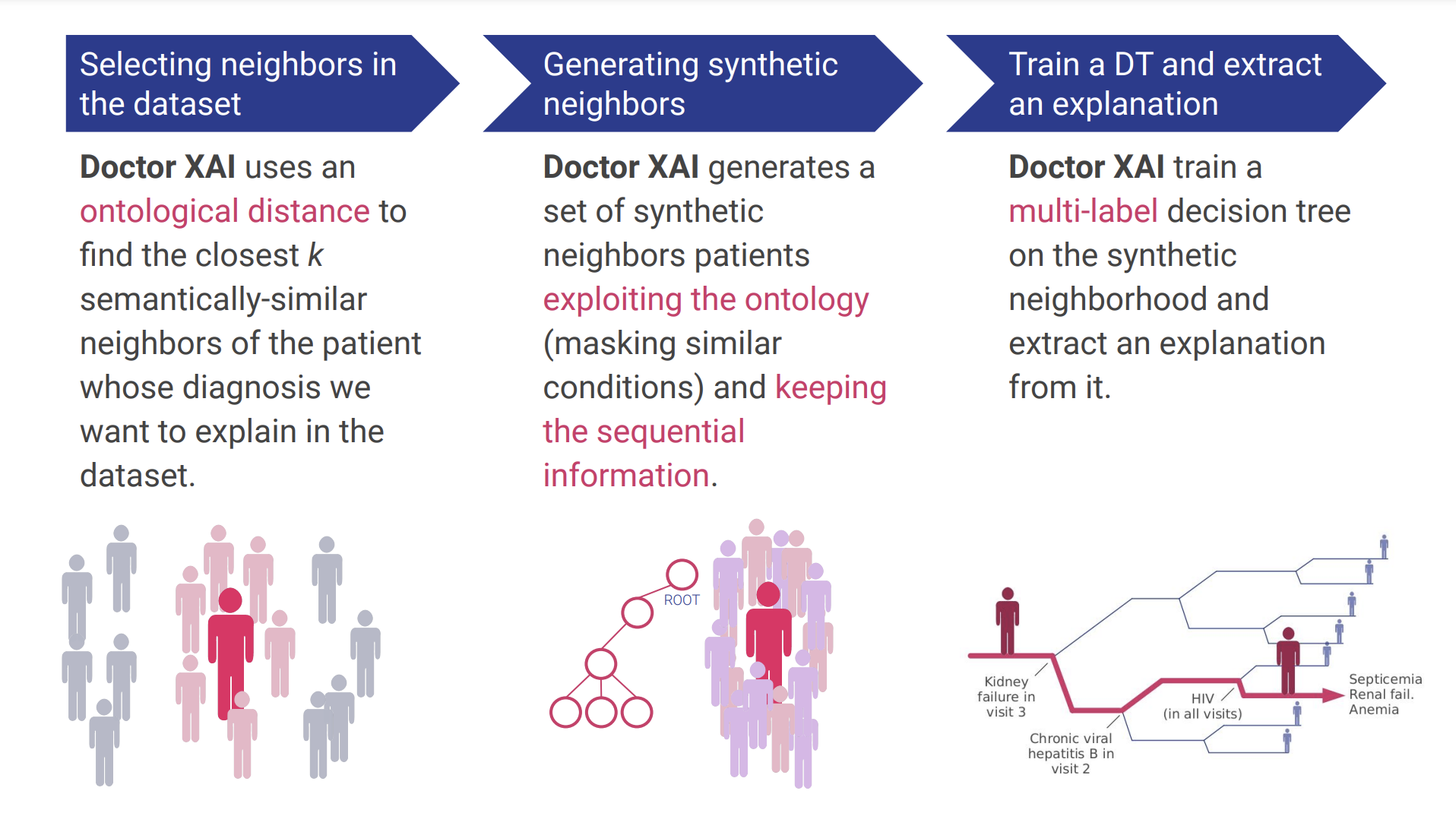

Several recent advancements in Machine Learning involve black-box models: algorithms that do not provide human-understandable explanations in support of their decisions. This limitation hampers the fairness, accountability and transparency of these models; the field of eXplainable Artificial Intelligence (XAI) tries to solve this problem by providing human-understandable explanations for black-box models. However, healthcare datasets (and the related learning tasks) often present peculiar features, such as sequential data, multi-label predictions, and links to structured background knowledge. In this paper, we introduce Doctor XAI, a model-agnostic explainability technique able to deal with multi-labeled, sequential, ontology-linked data. We focus on explaining Doctor AI, a multi-label classifier which takes as input the clinical history of a patient in order to predict the next visit. Furthermore, we show how exploiting the temporal dimension in the data and the domain knowledge encoded in the medical ontology improves the quality of the mined explanations.

62.

[GPP2019]

Giannotti Fosca, Pedreschi Dino, Panigutti Cecilia (2019) - Biopolitica, Pandemia e democrazia. Rule of law nella società digitale. In BIOPOLITICA, PANDEMIA E DEMOCRAZIA Rule of law nella società digitale

Abstract

The health crisis has transformed the relationship between the State and its citizens, leading to temporary limitations of fundamental rights and bringing to light conflicts between the two dimensions of health, as a right of the individual and as a right of the community, and between the right to health and the needs of the economic system. To cope with the emergency, the traditional balance among the powers of the State has been altered, in a perspective in which the time of the emergency seems set to extend long into the future. The pandemic has also strengthened the centrality of digital technology, from the use of artificial intelligence software for tracking the spread of infection to the new connectivity of remote work, by way of telemedicine. New technologies play a role in prevention and control, but they also raise delicate constitutional questions: how can individual privacy be protected in the face of the digital Panopticon? How should the status of digital platforms, genuine private technological powers, be framed within our legal systems? The research presented in this volume and in the two related volumes offers reflections on these themes by scholars from a multitude of disciplines: physicians, jurists, engineers, and experts in robotics and AI analyze the effects of the health emergency on the resilience of the Western democratic model, with the aim of opening a reflection on guidelines for rebuilding the country beyond the pandemic. In particular, this third volume addresses aspects related to the impact of digital technology and AI on legal proceedings, schools, and medicine, with a reflection on themes such as the organization of justice, liability, and the organizational shortcomings of institutions.