LORE Stable and Actionable

Overview

Recent years have witnessed the rise of accurate but opaque classification models that hide the logic of their internal decision processes. Explaining the decision a black-box classifier takes on a specific input instance is therefore of considerable interest.

We propose LORE-SA (LOcal Rule-based Explanations with Stability and Actionability), a local, rule-based, model-agnostic method that provides stable and actionable explanations (Guidotti et al., 2018; Guidotti et al., 2022).

Key Features

An explanation provided by LORE-SA consists of:

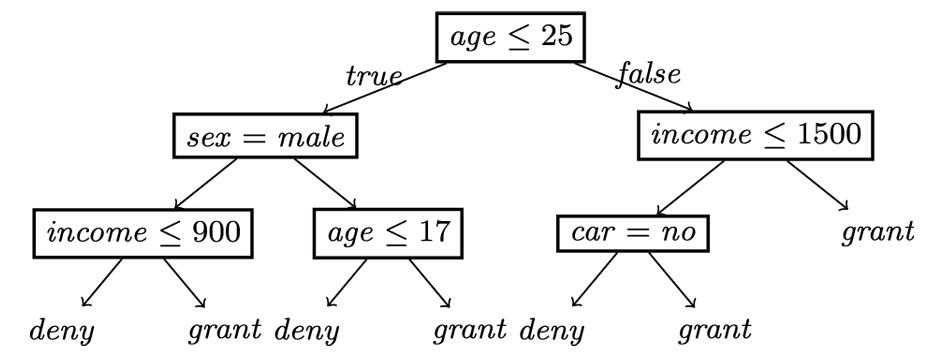

- Factual logic rule: States the reasons for the black-box decision on the specific instance

- Actionable counterfactual logic rules: Proactively suggest changes to the instance that would lead to a different outcome

These features make LORE-SA particularly valuable for real-world applications where answering “why” and “what if” questions is crucial for decision-making.
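To make the two rule types concrete, here is a minimal sketch of how a factual rule and counterfactual rules could be read off a small surrogate decision tree. Everything in it is hypothetical and for illustration only: the toy tree, the feature names and thresholds, the helper names, and the `immutable` argument, which is a simplified stand-in for actionability constraints. It is not the LORE-SA implementation. Counterfactuals are taken here to be the paths with a different outcome that violate the fewest conditions for the instance.

```python
# Hypothetical toy surrogate tree for a loan decision; features and thresholds are made up.
# Internal node: (feature, threshold, left, right), where left means feature <= threshold.
# Leaf: a class label.
TREE = ("income", 30_000,
        "deny",
        ("debt", 10_000, "grant", "deny"))


def paths(node, premises=()):
    """Yield (premises, label) for every root-to-leaf path of the tree."""
    if isinstance(node, str):
        yield list(premises), node
        return
    feature, threshold, left, right = node
    yield from paths(left, premises + ((feature, "<=", threshold),))
    yield from paths(right, premises + ((feature, ">", threshold),))


def holds(premise, x):
    """Check whether instance x satisfies a single split condition."""
    feature, op, threshold = premise
    return x[feature] <= threshold if op == "<=" else x[feature] > threshold


def factual_rule(tree, x):
    """Return the path whose premises are all satisfied by x."""
    for premises, label in paths(tree):
        if all(holds(p, x) for p in premises):
            return premises, label


def counterfactual_rules(tree, x, immutable=()):
    """Return the different-outcome paths that x violates in the fewest conditions."""
    _, outcome = factual_rule(tree, x)
    candidates = []
    for premises, label in paths(tree):
        if label == outcome:
            continue
        violated = [p for p in premises if not holds(p, x)]
        if any(p[0] in immutable for p in violated):
            continue  # would require changing a feature the user cannot act on
        candidates.append((len(violated), premises, label))
    fewest = min((n for n, _, _ in candidates), default=0)
    return [(premises, label) for n, premises, label in candidates if n == fewest]


x = {"income": 25_000, "debt": 5_000}
print(factual_rule(TREE, x))
print(counterfactual_rules(TREE, x))
print(counterfactual_rules(TREE, x, immutable={"income"}))
```

For this instance the factual rule is "income <= 30000, therefore deny", and the only counterfactual rule asks for a higher income. Declaring `income` immutable removes that suggestion, which is the sense in which a counterfactual can fail to be actionable.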

Methodology

Explanations are computed from a decision tree that mimics the behavior of the black box in the neighborhood of the instance under investigation. The approach follows these key steps:

- Neighborhood Generation: Synthetic neighbor instances are generated through a genetic algorithm whose fitness function is driven by the black-box behavior

- Ensemble Learning: An ensemble of decision trees is learned from neighborhoods of the instance under investigation

- Tree Merging: The ensemble is merged into a single decision tree through a bagging-like approach that favors both stability and fidelity

Merging trees learned from several neighborhoods is intended to keep explanations consistent across similar instances while maintaining high fidelity to the original black-box model’s behavior.
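The neighborhood generation step can be sketched as a small genetic algorithm. This is an illustrative sketch under stated assumptions, not the authors' implementation: the `black_box` function is a hypothetical toy classifier, the fitness function is one plausible shape (reward neighbors that are close to the instance, not identical to it, and carry the wanted label), and the population size, mutation settings, and distance normalization are arbitrary choices. Ensemble learning and tree merging are not covered here.

```python
import math
import random


def black_box(z):
    """Hypothetical black box: class 1 if the two features sum to more than 1."""
    return int(z[0] + z[1] > 1.0)


def distance(x, z):
    """Euclidean distance squashed into [0, 1)."""
    d = math.dist(x, z)
    return d / (1.0 + d)


def fitness(x, z, want_same):
    """Assumed fitness: wanted label, plus closeness to x, minus a penalty for z == x."""
    label_ok = (black_box(z) == black_box(x)) == want_same
    return float(label_ok) + (1.0 - distance(x, z)) - float(z == x)


def evolve(x, want_same, rng, size=40, generations=20, sigma=0.3, mutation_rate=0.5):
    """Evolve neighbors of x that share (or do not share) the black-box label of x."""
    population = [x] * size
    for _ in range(generations):
        children = []
        for parent in population:
            mate = rng.choice(population)
            # Uniform crossover between the parent and a random mate.
            child = [a if rng.random() < 0.5 else b for a, b in zip(parent, mate)]
            # Gaussian mutation applied independently to each feature.
            child = [v + rng.gauss(0.0, sigma) if rng.random() < mutation_rate else v
                     for v in child]
            children.append(tuple(child))
        # Drop duplicates (order-preserving), then keep the fittest individuals.
        pool = list(dict.fromkeys(population + children))
        pool.sort(key=lambda z: fitness(x, z, want_same), reverse=True)
        population = pool[:size]
    return population


def generate_neighborhood(x, seed=0):
    """Union of a same-label population and a different-label population around x."""
    x = tuple(x)
    rng = random.Random(seed)
    return evolve(x, True, rng) + evolve(x, False, rng)


neighborhood = generate_neighborhood((0.4, 0.4))
labels = [black_box(z) for z in neighborhood]
print(len(neighborhood), "neighbors,", sum(labels), "labeled 1 by the black box")
```

Evolving one population toward the instance's own label and one toward a different label yields a neighborhood that is dense around the local decision boundary, which is what a surrogate tree needs in order to learn both factual and counterfactual paths.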

Results

Extensive experiments demonstrate that LORE-SA advances the state of the art towards a comprehensive approach that successfully covers:

- Stability: Consistent explanations across similar instances

- Actionability: Practical counterfactual suggestions for decision change

- Fidelity: Accurate representation of the black-box model’s local behavior

- Interpretability: Human-understandable logic rules
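Two of the properties above lend themselves to simple numeric checks. The sketch below shows one possible way to quantify them, and is an assumption rather than the evaluation protocol used in the experiments: fidelity as the fraction of neighbors on which a surrogate agrees with the black box, and stability as the mean pairwise Jaccard similarity between the feature sets used by explanations of similar instances. The classifiers, points, and feature sets are made-up toy values.

```python
from itertools import combinations


def fidelity(black_box, surrogate, neighborhood):
    """Fraction of neighbors on which the surrogate agrees with the black box."""
    agree = sum(black_box(z) == surrogate(z) for z in neighborhood)
    return agree / len(neighborhood)


def jaccard(a, b):
    """Jaccard similarity of two collections, treated as sets."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def stability(feature_sets):
    """Mean pairwise Jaccard similarity; expects at least two feature sets."""
    pairs = list(combinations(feature_sets, 2))
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


# Hypothetical black box and surrogate over two numeric features.
def toy_black_box(z):
    return int(z[0] + z[1] > 1.0)


def toy_surrogate(z):
    return int(z[0] > 0.5)


points = [(0.2, 0.3), (0.6, 0.6), (0.6, 0.1), (0.4, 0.9)]
fidelity_score = fidelity(toy_black_box, toy_surrogate, points)

# Features used by the factual rules of three similar instances (made up).
rule_features = [{"income", "debt"}, {"income"}, {"income", "debt"}]
stability_score = stability(rule_features)

print(fidelity_score, stability_score)
```

In this toy setup the surrogate agrees with the black box on two of the four points, and the three explanations share features only partially, so neither score reaches 1.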

The method provides a balanced solution for factual and counterfactual explanations, making it a powerful tool for understanding and interacting with complex classification models.